Generative AI models are getting closer to taking action in the real world. Already, the big AI companies are introducing AI agents that can take care of web-based busywork for you, ordering your groceries or making your dinner reservation. Today, Google DeepMind announced two generative AI models designed to power tomorrow’s robots.

The models are both built on Google Gemini, a multimodal foundation model that can process text, voice, and image data to answer questions, give advice, and generally help out. DeepMind calls the first of the new models, Gemini Robotics, an “advanced vision-language-action model,” meaning that it can take all those same inputs and then output instructions for a robot’s physical actions. The models are designed to work with any hardware system, but were mostly tested on the two-armed Aloha 2 system that DeepMind introduced last year.

In a demonstration video, a voice says: “Pick up the basketball and slam dunk it” (at 2:27 in the video below). Then a robot arm carefully picks up a miniature basketball and drops it into a miniature net. While it wasn’t an NBA-level dunk, it was enough to get the DeepMind researchers excited.

Google DeepMind released this demo video showing off the capabilities of its Gemini Robotics foundation model to control robots.

“This basketball example is one of my favorites,” said Kanishka Rao, the principal software engineer for the project, in a press briefing. He explains that the robot had “never, ever seen anything related to basketball,” but that its underlying foundation model had a general understanding of the game, knew what a basketball net looks like, and understood what the term “slam dunk” meant. The robot was therefore “able to connect those [concepts] to actually accomplish the task in the physical world,” says Rao.

What Are the Advances of Gemini Robotics?

Carolina Parada, head of robotics at Google DeepMind, said in the briefing that the new models improve over the company’s prior robots in three dimensions: generalization, adaptability, and dexterity. All of these advances are necessary, she said, to create “a new generation of helpful robots.”

Generalization means that a robot can apply a concept that it has learned in one context to another situation. The researchers looked at visual generalization (for example, does it get confused if the color of an object or background changes), instruction generalization (can it interpret commands that are worded in different ways), and action generalization (can it perform an action it has never done before).

Parada also says that robots powered by Gemini can better adapt to changing instructions and circumstances. To demonstrate that point in a video, a researcher told a robot arm to put a bunch of plastic grapes into the clear Tupperware container, then proceeded to shift three containers around on the table in an approximation of a shyster’s shell game. The robot arm dutifully followed the clear container around until it could fulfill its directive.

Google DeepMind says Gemini Robotics is better than previous models at adapting to changing instructions and circumstances. Google DeepMind

As for dexterity, demo videos showed the robotic arms folding a piece of paper into an origami fox and performing other delicate tasks. However, it’s important to note that the impressive performance here is in the context of a narrow set of high-quality data that the robot was trained on for these specific tasks, so the level of dexterity that these tasks represent is not being generalized.

What Is Embodied Reasoning?

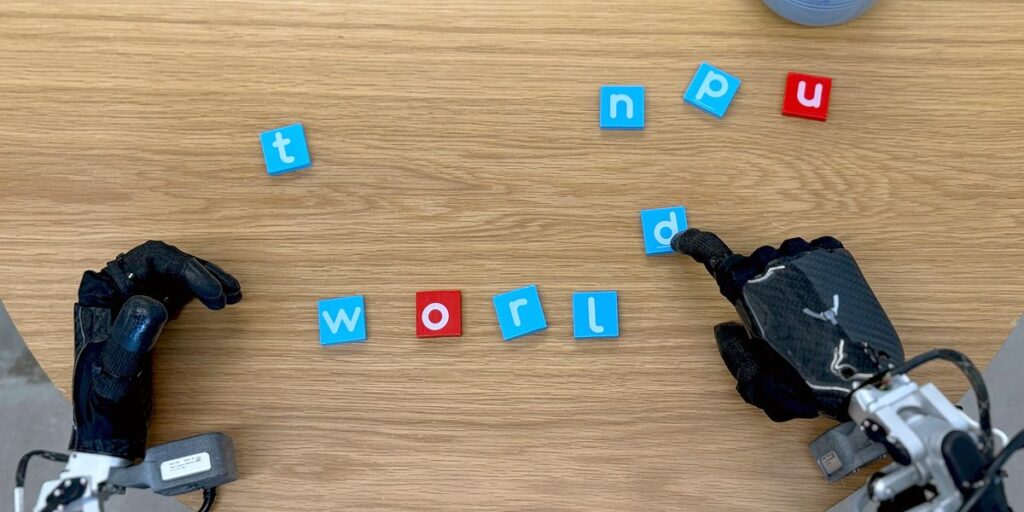

The second model introduced today is Gemini Robotics-ER, with the ER standing for “embodied reasoning,” which is the sort of intuitive physical world understanding that humans develop with experience over time. We’re able to do clever things like look at an object we’ve never seen before and make an educated guess about the best way to interact with it, and this is what DeepMind seeks to emulate with Gemini Robotics-ER.

Parada gave an example of Gemini Robotics-ER’s ability to identify an appropriate grasping point for picking up a coffee cup. The model correctly identifies the handle, because that’s where humans tend to grasp coffee mugs. However, this illustrates a potential weakness of relying on human-centric training data: for a robot, especially a robot that might be able to comfortably handle a mug of hot coffee, a thin handle might be a much less reliable grasping point than a more enveloping grasp of the mug itself.

DeepMind’s Approach to Robot Safety

Vikas Sindhwani, DeepMind’s head of robotic safety for the project, says the team took a layered approach to safety. It starts with classic physical safety controls that manage things like collision avoidance and stability, but also includes “semantic safety” systems that evaluate both a robot’s instructions and the consequences of following them. These systems are most sophisticated in the Gemini Robotics-ER model, says Sindhwani, which is “trained to evaluate whether or not a potential action is safe to perform in a given scenario.”

And because “safety is not a competitive endeavor,” Sindhwani says, DeepMind is releasing a new data set and what it calls the Asimov benchmark, which is intended to measure a model’s ability to understand common-sense rules of life. The benchmark contains both questions about visual scenes and text scenarios, asking models’ opinions on things like the desirability of mixing bleach and vinegar (a combination that makes chlorine gas) and putting a soft toy on a hot stove. In the press briefing, Sindhwani said that the Gemini models had “strong performance” on that benchmark, and the technical report showed that the models got more than 80 percent of questions correct.

DeepMind’s Robotic Partnerships

Back in December, DeepMind and the humanoid robotics company Apptronik announced a partnership, and Parada says that the two companies are working together “to build the next generation of humanoid robots with Gemini at its core.” DeepMind is also making its models available to an elite group of “trusted testers”: Agile Robots, Agility Robotics, Boston Dynamics, and Enchanted Tools.
